AB Test | Conversion Rate Optimization Services | NextCore Media

AB Test Significance, Debunked

Your AB Test’s Significance

Enter the data from your “A” and “B” pages into the AB test calculator to see if your results have reached statistical significance. Most AB testing experts use a confidence level of 95% (a significance level of 5%). This means that if there were no real difference between your pages, a result at least as extreme as yours would appear by chance no more than 1 time in 20.
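If you want to see roughly what a calculator like this does with your numbers, here is a minimal sketch. It assumes a two-sided, pooled two-proportion z-test, which is a common choice but not necessarily the method behind the calculator on this page. The function name `ab_significance` and its parameters are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def ab_significance(visitors_a, conversions_a, visitors_b, conversions_b, alpha=0.05):
    """Compare two pages with a pooled two-proportion z-test (assumed method).

    Assumes both pages had visitors and that page A had at least one
    conversion, so that the relative uplift is defined.
    """
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    # Relative uplift of B over A, e.g. 0.30 means "30% more conversions".
    uplift = (rate_b - rate_a) / rate_a
    # Pooled conversion rate under the assumption that A and B do not differ.
    pooled = (conversions_a + conversions_b) / (visitors_a + visitors_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value: chance of a gap at least this large if A and B were equal.
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return {
        "rate_a": rate_a,
        "rate_b": rate_b,
        "uplift": uplift,
        "p_value": p_value,
        "significant": p_value < alpha,
    }
```

For example, `ab_significance(1000, 100, 1000, 130)` reports a 30% uplift with a p-value below 0.05, so it would count as significant at the 95% level.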

AB Test Significance:

What Do My Results Mean?

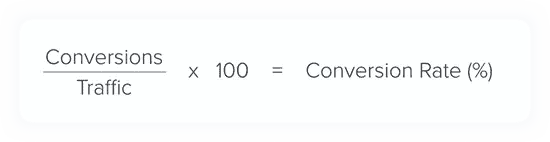

Conversion Rate

AB testing is one of the most reliable ways to make sure you increase your conversion rate in the long run.

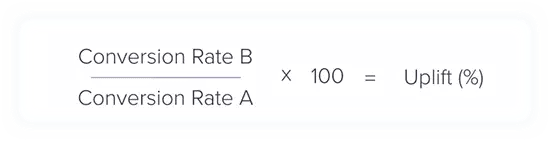

Uplift

AB Test Significance

Within AB testing statistics, your results are considered “significant” when they are very unlikely to have occurred by chance. Achieving statistical significance with a 95% Confidence Level means that, if there were no real difference between your pages, results like yours would occur by chance only about once in every 20 tests.
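One way to get a feel for the "once in every 20" idea is to simulate many tests where page A and page B are actually identical and count how often the test still comes out significant. The sketch below assumes a pooled two-proportion z-test. The conversion rate, visitor count and function names are made up for illustration.

```python
import random
from math import sqrt
from statistics import NormalDist


def p_value(n_a, conv_a, n_b, conv_b):
    """Two-sided p-value from a pooled two-proportion z-test (assumed method)."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    if pooled in (0, 1):
        return 1.0  # no variation at all, so no evidence of a difference
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


def false_positive_rate(true_rate=0.10, visitors=500, n_tests=1000, seed=0):
    """Share of A/A tests (no real difference) that still look significant."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_tests):
        # Both groups convert at the same true rate, so any gap is pure chance.
        conv_a = sum(rng.random() < true_rate for _ in range(visitors))
        conv_b = sum(rng.random() < true_rate for _ in range(visitors))
        if p_value(visitors, conv_a, visitors, conv_b) < 0.05:
            hits += 1
    return hits / n_tests
```

Calling `false_positive_rate()` should return a value close to 0.05. In other words, about 1 in 20 of these no-difference tests is flagged as significant by chance alone.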

P-Value

AB Test Significance:

How Do I Get Significant Results?

- Increase your sample size

- Create a larger Uplift

- Produce more consistent data (with less variance)
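The three levers above are linked, and a rough sample size estimate shows how. The sketch below uses the standard normal-approximation formula for comparing two conversion rates. The 80% power setting is an assumption that does not come from this page, and the function name is hypothetical.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(base_rate, relative_uplift, alpha=0.05, power=0.80):
    """Approximate visitors needed in each group to detect a given uplift.

    power=0.80 is an assumed, commonly used default: the chance of
    detecting the uplift if it really exists.
    """
    p1 = base_rate
    p2 = base_rate * (1 + relative_uplift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for 95% confidence
    z_beta = NormalDist().inv_cdf(power)
    # Variance of the two conversion rates: less variance means fewer visitors.
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)
```

With a 10% baseline conversion rate, going from a 10% uplift to a 20% uplift cuts the required sample to roughly a quarter, because the difference between the two rates is squared in the denominator.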

Practical Steps To Increase Your Sample Size

1) Run your tests for longer, keeping in mind that this increases the risk of polluted data. Many browsers delete cookies within one month (some delete them after two weeks). Since AB testing tools use cookies to sort your visitors into group A and group B, a returning visitor whose cookie has been deleted can end up in the other group, so long tests run the risk of cross-sampling.

Practical Steps To Create a Larger Uplift

2) One of the most successful variations to try is a version of your webpage with persuasive notifications installed. These grab your visitors’ attention and can be used to create psychological effects such as Social Proof, FOMO and Urgency.

3) Another way to create Uplift is to reduce Friction on your page. You can do this by reducing the number of fields in a form, adding helpful text or visual cues, or with Friction notifications.

How to produce more consistent data

AB Test Significance:

What To Do When You Reach Significance

- Statistical significance does not mean practical importance. There may be other things you should change first.

- Even AB tests with statistical significance can still be false positives. It’s best to change things gradually.

- Optimising one part of your website can have a negative effect on another part, and optimising for one metric (conversions) may have a negative effect on another metric (return customers).
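One way to check whether a significant result is also practically important is to look at a confidence interval for the difference in conversion rates, not just at whether the test passed. The sketch below assumes a simple normal-approximation interval. The function name and the example figures are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def uplift_interval(visitors_a, conversions_a, visitors_b, conversions_b,
                    confidence=0.95):
    """Confidence interval for the absolute difference (rate B minus rate A)."""
    rate_a = conversions_a / visitors_a
    rate_b = conversions_b / visitors_b
    diff = rate_b - rate_a
    # Unpooled standard error, since we are estimating the size of the gap.
    std_err = sqrt(rate_a * (1 - rate_a) / visitors_a
                   + rate_b * (1 - rate_b) / visitors_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    return diff - z * std_err, diff + z * std_err


# Hypothetical large test: 200,000 visitors per page, 10.0% vs 10.3% conversion.
low, high = uplift_interval(200_000, 20_000, 200_000, 20_600)
```

Here the whole interval sits above zero, so the result is statistically significant, yet the likely gain is only a fraction of a percentage point. Whether that is worth acting on is a business decision, not a statistical one.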

Classic Errors with AB Test Significance

- Thinking significance is the likelihood that your B page is better than your A page. In fact, it only tells you how unlikely your results would be if there were no real difference between the two pages.

- Thinking a significant result “proves” that one approach is better than another. You can’t prove such a generalised hypothesis with AB testing. Instead, you can show that there is strong evidence that an Uplift occurred during your test, with your given sample and in the context of your website.

- Thinking that your customers “preferred” the B version of your page. All you are measuring is the impact your changes have on your customers’ behaviour – not how those changes affect their perceptions.

AB Test Significance, Debunked

your AB test’s significance

Enter the data from your “A” and “B” pages into the AB test calculator to see if your results have reached statistical significance. Most AB testing experts use a significance level of 95%, which means that 19 times out of 20, your results will not be due to chance.

AB Test Significance:

What Do My Results Mean?

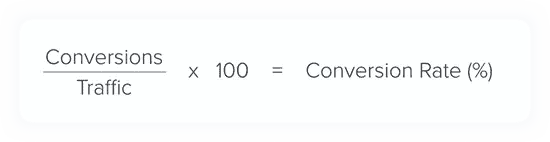

Conversion Rate

AB testing is the best way to make sure you increase your conversion rate in the long run.

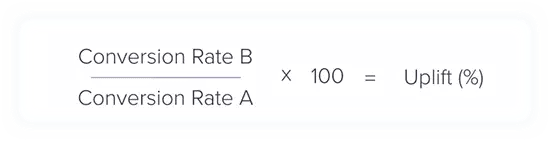

Uplift

AB Test Significance

Within AB testing statistics, your results are considered “significant” when they are very unlikely to have occurred by chance. Achieving statistical significance with a 95% Confidence Level means you know that your results will only occur by chance once in every 20 times.

P-Value

AB Test Significance:

How Do I Get Significant Results?

- Increase your sample size

- Create a larger Uplift

- Produce more consistent data (with less variance)

Practical Steps To Increase Your Sample Size

1) Run your tests for longer, risking the chance that your data will be polluted. Most browsers delete any cookies within one month (some delete them after two weeks). Since AB testing tools use cookies to sort your visitors into group A and group B, long tests run the risk of cross-sampling.

Practical Steps To Create a Larger Uplift

2) One of the most successful variations to try is a version of your webpage with persuasive notifications installed. These grab your visitors’ attention and can be used to create psychological effects such as Social Proof, FOMO and Urgency.

3) Another way to create Uplift is to reduce Friction on your page. You can do this by removing the number of fields in a form, adding helpful text or visual cues, or with Friction notifications.

How to produce more consistent data

AB Test Significance:

What To Do When You Reach Significance

- Statistical significance does not mean practical importance. There may be other things you should change first.

- Even AB test with statistical significance can still be false positives. It’s best to change things gradually.

- Optimising one part of your website can have a negative effect on another part, and optimising for one metric (conversions) may have a negative effect on another metric (return customers).

Classic Errors with AB Test Significance

- Thinking significance is the likelihood that your B page is better than your A page. In fact, it is only the probability that your results are not due to chance.

- Thinking a significant result “proves” that one approach is better than another. You can’t prove such a generalised hypothesis with AB testing. Instead, you can show that:There is a significant probability that an Uplift occurred during your test, with your given sample and in the context of your website.

- Thinking that your customers “preferred” the B version of your page. All you are measuring is the impact your changes have on your customers’ behaviour – not how it affects their perceptions.